Much Faster CryptoJS SHA1 | |

| May 17th, 2014 | |

|

SHA1 is a pretty crucial part of my current startup, Ultralink. We need (relatively) decent uniqueness calculation and change detection, and need to be able to do it really, really quickly on a lot of content. We picked SHA1 because it’s widely supported, simple to implement, and fast. For the portions of Ultralink that run in Javascript, we needed to include CryptoJS, a third-party library. CryptoJS appears to be the go-to library for cryptographic functions in Javascript, and seemed to fit our bill perfectly. Development was humming along and everything was fine. When we would occasionally do performance passes and stress tests on desktop web browsers, the SHA1 calculation wasn’t even on the radar for perf offenders. Once we started taking a look on iOS devices though, specifically Mobile Safari, it became a completely different story…

Safari 6’s performance profiling tools showed that on pages with a lot of content, CryptoJS.SHA1 was incurring a pretty significant performance hit. My test device is an iPad 3 running iOS 7. Compared to my desktop machine (a 2013 Retina MacBookPro), SHA1 was running over two orders of magnitude slower on the iPad. We looked around for alternative Javascript SHA1 implementations that might run faster, but the ones we found were either slower, or on par with CryptoJS. So I decided to roll up my sleeves and see if I couldn’t squeeze some more performance out of CryptoJS.SHA1 myself.

Old And Busted

It appears like the official source repo for CryptoJS is still in subversion at Google Code. So I checked that out and poked at it until I figured how to build it (php builder/builder.php). I then started to take a long, hard look at _doProcessBlock to see if I couldn’t get a few more percent out of it. At first glance, it seemed pretty tight. Things were laid out nicely and it was really easy to read. It also didn’t look there were any glaringly unnecessary portions that would incur slowdowns. But my instincts from years of optimizing C started to kick in, and I started to think about cache locality, register re-use and the like. So I started tinkering.

Original _doProcessBlock:

_doProcessBlock: function (M, offset) { // Shortcut var H = this._hash.words; // Working variables var a = H[0]; var b = H[1]; var c = H[2]; var d = H[3]; var e = H[4]; // Computation for (var i = 0; i < 80; i++) { if (i < 16) { W[i] = M[offset + i] | 0; } else { var n = W[i - 3] ^ W[i - 8] ^ W[i - 14] ^ W[i - 16]; W[i] = (n << 1) | (n >>> 31); } var t = ((a << 5) | (a >>> 27)) + e + W[i]; if (i < 20) { t += ((b & c) | (~b & d)) + 0x5a827999; } else if (i < 40) { t += (b ^ c ^ d) + 0x6ed9eba1; } else if (i < 60) { t += ((b & c) | (b & d) | (c & d)) - 0x70e44324; } else /* if (i < 80) */ { t += (b ^ c ^ d) - 0x359d3e2a; } e = d; d = c; c = (b << 30) | (b >>> 2); b = a; a = t; } // Intermediate hash value H[0] = (H[0] + a) | 0; H[1] = (H[1] + b) | 0; H[2] = (H[2] + c) | 0; H[3] = (H[3] + d) | 0; H[4] = (H[4] + e) | 0; }

Under The Knife

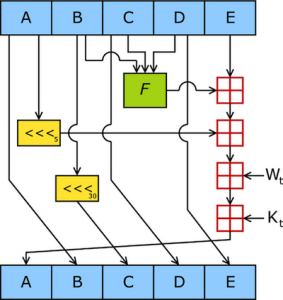

My first thought was that this routine might benefit from a bit of partial loop unrolling. As the main for loop goes over the block, there are conditionals that divide it into sections based on where in the block we are. Each of these sections has it’s own specific operations that need to be performed, but also has a decent amount of common operations between them. So instead of having one giant for loop that iterated over all 80 sections of the block and used conditionals to determine what operations to perform, I broke it into 5 while loops all sharing a common counter (i) corresponding to sections 0-15, 16-19, 20-39, 40-59 and 60-79. This way, instead of requiring up to 4 conditional tests to determine what operations to perform every time a section was examined, we now had zero conditionals. Just this on its own was a huge win. At the cost of some duplicated code, which increased the file size, we now had foregone millions of completely unnecessary if tests which add up very quickly at scale. I experimented with doing a complete loop unroll of a couple of the while loops, but found that doing so had adverse performance results.

Now that we had broken the single for loop into individual while loops with specific logic for each section, we didn’t need to set the variable t and then add to it in a separate operation. By combining the operations into a single assignment, instead of an assignment and an add, we got a very slight but measurable speed boost.

The next thing I noticed was the declaration of the n and t variables. I’m not familiar with the internals of local variable allocation in Javascript, but I suspected that there was probably some significant overhead in the creation and destruction of that little chunk of memory usage within that tiny scope. I was right. Declaring the n and t variables before the start of the while loops and then re-using them allowed us to forego the bring up and takedown of those variables that was previously performed for nearly every single section of a block. Because they’re temporary variables with a very defined usage in the algorithm, we only need to pay the cost of allocating them once at the start of a block and can simply overwrite the old value with the new one each time. This also resulted in a very nice speed boost.

New Hotness

_doProcessBlock: function (M, offset) { // Shortcut var H = this._hash.words; // Working variables var a = H[0]; var b = H[1]; var c = H[2]; var d = H[3]; var e = H[4]; // Computation var i = 0; var t; var n; while( i < 16 ) { W[i] = M[offset + i] | 0; t = ((a << 5) | (a >>> 27)) + e + W[i] + ((b & c) | (~b & d)) + 0x5a827999; e = d; d = c; c = (b << 30) | (b >>> 2); b = a; a = t; i++; } while( i < 20 ) { n = W[i - 3] ^ W[i - 8] ^ W[i - 14] ^ W[i - 16]; W[i] = (n << 1) | (n >>> 31); t = ((a << 5) | (a >>> 27)) + e + W[i] + ((b & c) | (~b & d)) + 0x5a827999; e = d; d = c; c = (b << 30) | (b >>> 2); b = a; a = t; i++; } while( i < 40 ) { n = W[i - 3] ^ W[i - 8] ^ W[i - 14] ^ W[i - 16]; W[i] = (n << 1) | (n >>> 31); t = ((a << 5) | (a >>> 27)) + e + W[i] + (b ^ c ^ d) + 0x6ed9eba1; e = d; d = c; c = (b << 30) | (b >>> 2); b = a; a = t; i++; } while( i < 60 ) { n = W[i - 3] ^ W[i - 8] ^ W[i - 14] ^ W[i - 16]; W[i] = (n << 1) | (n >>> 31); t = ((a << 5) | (a >>> 27)) + e + W[i] + ((b & c) | (b & d) | (c & d)) - 0x70e44324; e = d; d = c; c = (b << 30) | (b >>> 2); b = a; a = t; i++; } while( i < 80 ) { n = W[i - 3] ^ W[i - 8] ^ W[i - 14] ^ W[i - 16]; W[i] = (n << 1) | (n >>> 31); t = ((a << 5) | (a >>> 27)) + e + W[i] + (b ^ c ^ d) - 0x359d3e2a; e = d; d = c; c = (b << 30) | (b >>> 2); b = a; a = t; i++; } // Intermediate hash value H[0] = (H[0] + a) | 0; H[1] = (H[1] + b) | 0; H[2] = (H[2] + c) | 0; H[3] = (H[3] + d) | 0; H[4] = (H[4] + e) | 0; }

I tried a couple of other things that didn’t pan out, and my exact process of arriving at the above optimizations had some intermediate steps. For instance, I initially had one while loop that comprised of sections 0-19 with a single conditional instead of the two while loops covering sections 0-15 and 16-19. At the end of the day though, the combined benefits of all my changes resulted in a reduction of run time on Mobile Safari by 40% (151.8ms down to 91ms) on the large page I was benchmarking on! Needless to say, I was quite pleased. The speedup was visually perceptible and along with optimizations in other areas of the Ultralink code, contributed to making things nice and smooth on Mobile Safari. I didn’t benchmark these changes on my desktop machine and so I don’t know if there are any adverse performance effects there or on other platforms. I suspect though, that by and large, these code changes will have beneficial effects on other platforms as well.

Wrapup

The only (slight) downside to these performance improvements is a small increase in file size. The tradeoff was well worth it though. For the 40% shaved off in time, (minified) file size only increased by 9.1% (4294B up to 4688B).

| Run 1 | Run 2 | Run 3 | Run 4 | Run 5 | Run Average | Perf Gains | Filesize | Filesize Δ | |

| Baseline | 151ms | 152ms | 153ms | 152ms | 151ms | 151.8ms | 4294B | ||

| Optimization L1 | 117ms | 108ms | 107ms | 107ms | 108ms | 109.4ms | 27.9% | 4626B | 7.7% |

| Optimization L2 | 105ms | 104ms | 104ms | 109ms | 105ms | 105.4ms | 30.5% | 4614B | 7.4% |

| Optimization L3 | 94ms | 94ms | 94ms | 94ms | 96ms | 94.4ms | 37.8% | 4691B | 9.2% |

| Optimization L4 | 92ms | 90ms | 92ms | 91ms | 90.0ms | 91ms | 40.0% | 4688B | 9.1% |

This new SHA1 implementation has been deployed for a few months in our development builds and is going to be going out in our next version of Ultralink. You can poke at a copy of my code modifications on GitHub here or just grab the single minified file here. Got any further performance enhancements to either SHA1 or another crypto routine? Feel free to fork my code and contribute back.

May 17th, 2014 at 9:50 pm

I had a need for a faster SHA-1 implementation for mobile in a different context, password stretching functions (PBKDF2), which involve hashing a large number (tens of thousands) of small messages.

There is a high performance implementation of SHA-1 in JS called Rusha – https://github.com/srijs/rusha . The performance is achieved using 1) A similar loop unrolling approach to the one you describe here to avoid conditional branches in the main block processing function. 2) Using the asm.js subset of Javascript for the SHA-1 core which is well optimized by JS engines, whether they support asm.js explicitly or not.

For my particular use case where there are large numbers of SHA1() calls as well as a large total number of bytes being hashed, it was profitable, especially on mobile and for slightly older browsers, to take another leaf from the C book and modify Rusha to do all of its work using existing buffers and avoid allocating and initializing new buffers on each call – https://github.com/robertknight/1pass-web/blob/master/lib/crypto/pbkdf2.ts

May 18th, 2014 at 7:13 am

Chiming in here to mention SJCL (https://crypto.stanford.edu/sjcl/). I needed PBKDF2 to be fast rather than SHA1 in isolated usage. I found that CryptoJS is really really slow at that task (I’m calculating 100,000s of iterations), but SJCL is magnitudes faster.

According to this benchmark (https://github.com/dominictarr/crypto-bench/blob/master/results.md), rusha does seem to be top for SHA1. SJCL is best for PBKDF2 even though its SHA1 speed isn’t top. Looks like SJCL’s implementation is better suited for PBKDF2.