OpenGL Game Optimization for iOS

April 22nd, 2011


For the past month or two I have been lightly seeding Cannonade to testers. I have gotten the strong impression that the “fun” of my game was unfortunately getting lost in the low framerate. There is a certain reaction in the human brain that I am trying to evoke by making the blocks explode and collapse in physically realistic ways. However, when the framerate is too low the perception of motion is lost and so is my core game mechanic. I first spent a lot of time optimizing the execution of the Bullet physics engine and found a significant speed increase by tweaking compiler settings. I found that the execution of the physics simulation on iOS devices was very strongly tied to CPU performance. Now the time had come to start attacking the performance of the graphics engine. I was hopeful that I would find even more significant speedups in this area because I wrote the graphics engine myself and could employ device-specific optimizations to take advantage of specific strengths or avoid specific weaknesses. Many of the techniques I tried have been done before, but I thought it would be worthwhile to document my experiences executing them in my specific situation. These are the results of a week and a half of aggressive performance benchmarking, exploration and experimentation. It goes without saying that my results are very data set specific, so they could perform better, worse or the same when applied to a different iOS app.

Testing Methodology

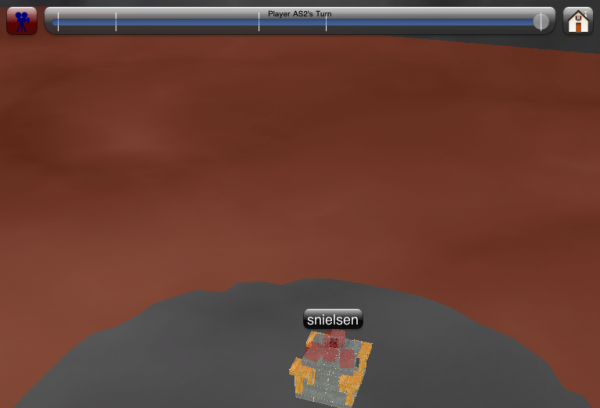

The way I measured performance was to replay a match between two of my test accounts. I had the auto-camera turned on so the scene was constantly being rendered from a different perspective. Hopefully this constant motion represented some of the more expensive frames my game would need to render. My performance metric was how many seconds a device took to run through the entire replay with a specific graphics configuration (because my game was running far below 60 frames per second for most of the testing, I didn’t need to worry about the upper benchmark cap of 60 Hz). This was not quite a perfect metric because the auto-camera’s movements are based on real time and not simulation time. For example, if the camera happens to be looking at the scene such that a lot of translucent fragments are drawn behind an opaque object which has already been rendered, the frame can be drawn much quicker than if those fragments were in front of the object from the camera’s perspective. Thus a graphics optimization could speed up the simulation in a way that inadvertently creates more work than a slower running configuration. I came to the conclusion that the level of variation was probably acceptable for my situation and decided to leave the auto-camera functionality how it was. I now had a benchmark with which to test my graphics engine changes. Hardware tested was a 1st generation iPad (4.3.1 GM) and an iPhone 3GS (4.3.1 GM).

This is a grainy webcam video of the benchmark replay after most of the optimizations in this article had been applied. I apologize for the quality. I am in search of a cheap way to get good quality video off of an iPad.

A Measurement Conundrum

I started out by trying to measure how much time each part of the graphics engine was taking up in the overall rendering process. I did this by running the benchmark with various portions of the engine turned off (terrain, blocks, particles, skybox, audio, UI, etc…) and measuring what kind of speedup occurred. I charted the data and thought that I had a pretty good idea of how much time each section was taking. I was wrong. I started to do performance runs with various combinations of components turned off and found that the proportion of execution time for a given engine component could vary wildly depending on which other components were active at the same time. One very pronounced example of this was terrain rendering on the iPad. Terrain rendering in Cannonade has two portions: first I render a textured tri-strip mesh of the actual terrain, and then I render a single translucent textured quad which intersects the terrain mesh to produce a liquid-like visual covering the portions of the terrain mesh that are below the “waterline” (in the only level I have built so far that liquid is lava). For one run I configured things such that only the liquid quad would render and the terrain mesh would be skipped. I thought that the measured speedup from not drawing the terrain mesh would tell me how much it was costing me. To my great surprise it ran significantly slower with the terrain off. In examining what might be the cause of the performance degradation I observed that during the run the lava was visible on about 30% of the screen on average (when the terrain was turned on). With the terrain mesh rendering turned off the lava was visible on almost 100% of the screen.
On the iPad the cost of alpha blending fragments is so high that even though the terrain mesh rendering costs a little more in vertex processing and causes some overdraw (drawing fragments more than once), it was faster overall because it caused the 70% of the lava fragments that were not visible to skip the alpha blending process altogether. One other reason that I could no longer reliably isolate the exact contribution of a particular graphics engine component was that I found many optimizations which would seem to have no discernible effect on the iPad but would occasionally introduce a large speedup on the iPhone 3GS. I concluded that in many cases I would just have to make numerous runs with various configuration permutations on both sets of hardware and see how low I could get the benchmark time to go. I figured that if I could find configurations that run optimally on both the 1st generation iPad and the iPhone 3GS, the game would run fine on any supported hardware (armv7 and forward) both current and future.

I was hoping to be able to produce very informative tables and charts which would identify exactly how much each optimization contributed to the overall speedup of my final configuration. After the discoveries described above, though, it was clear that any hard numbers presented would not be very meaningful. So I have decided to describe each of the optimizations I attempted and give an approximate estimate from my data as to how much of a speedup it gave me on both the iPad and 3GS. Some of the techniques below were more deeply investigated on the iPad than the 3GS. Optimizations are roughly grouped by type and are not chronological. Feel free to skip sections that don’t interest you but keep in mind that some sections build on what has come before. So without further ado, below are all the graphics optimizations I tried in the week and a half I budgeted for graphics optimization:

![]() == I decided to go with this optimization.

Lava

Colored Lava

When I started doing performance investigation I had not yet created any interesting lava texture to blend in over the terrain mesh and so at the time I simply had a plain single color texture. I thought I could get some nice speedups by foregoing the texture lookups for all the lava fragments, especially on the iPad. It didn’t yield as much performance as I had hoped but it did speed things up a tad on the iPad. There was no discernible difference on the 3GS.

Non-Blended Lava

At one point later in the optimization process, I was curious as to just how expensive alpha blending the lava texture is on the iPad. I was astonished to see a tremendous speedup when I turned lava blending off. The performance increase was so great that I decided this was a change I needed to take even though there would be a compromise on image quality. Fortunately this quality compromise was fairly easy to design around. I decided to spend some time making a good-looking lava texture that would look decent all on its own instead of having the lava blended over the terrain mesh texture. This didn’t have quite as drastic an effect on the 3GS but nonetheless it also experienced a significant gain.

No Lava Depth Mask

Because a lot of fragment processing time is taken up with drawing the lava I tried to reduce fragment processing down to the bare minimum I could get away with. One thing that I thought of was to disable writing to the depth buffer during lava drawing (the depth test is still on though). The lava is drawn after the terrain mesh. This had a very slight but not significant effect on performance on the iPad. It also has a side effect: any objects drawn afterward, like blocks and particle emitters, will be drawn over the lava incorrectly. Although I do not like the incorrect drawing effect I am still on the fence about taking this one because 9X% of the time the action is going to be on top of the terrain mesh, and any blocks/projectiles/etc that get pushed under the surface of the lava will sustain damage and will not exist very long in that inconsistent drawing state. Probably not worth the minor speedup.

Terrain

Sub-Lava Terrain Removal

Once I had decided to turn off lava texture blending I thought about how there were a lot of wasted terrain mesh triangles being rasterized even though they were just going to eventually be written over by the lava. I decided that since they would never be seen (although they would still be simulated physically) there was no point having them go through the graphics pipeline. Instead of actually reducing the number of triangles in the mesh, I would modify the position of the vertices so that all the triangles under the surface of the current waterline were degenerate. This made it so that even though the same number of triangles are being pushed through the pipeline, all the ones under the surface of the lava have zero area and thus do not produce any fragments at all. Doing things this way could also come in handy in the future: if I ever needed to change the waterline or perform terrain deformation I wouldn’t have to reconfigure the terrain VBO, I would just have to update the data in it (although perhaps an update to a GL_STATIC_DRAW VBO would be no faster than just creating a new one). This provided a small but measurable performance increase on the iPad.
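As a rough sketch of the degenerate-triangle idea (not the actual Cannonade code), the function below works on a render-only copy of the positions laid out as an unindexed triangle list, which is a simplifying assumption; in a tri-strip the vertices are shared between neighboring triangles and need more care. The name `collapse_submerged_triangles` and the choice of y as the "up" axis are also assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Positions are packed x, y, z floats, three vertices (9 floats) per
 * triangle. ASSUMPTIONS: unindexed triangle list, y is "up", and this
 * is a copy used only for rendering (physics keeps the real mesh). */
size_t collapse_submerged_triangles(float *positions, size_t triangle_count,
                                    float waterline_y)
{
    size_t collapsed = 0;
    assert(positions != NULL || triangle_count == 0);
    for (size_t t = 0; t < triangle_count; ++t) {
        float *v0 = positions + t * 9;
        float *v1 = v0 + 3;
        float *v2 = v0 + 6;
        if (v0[1] < waterline_y && v1[1] < waterline_y && v2[1] < waterline_y) {
            /* Snap the other two corners onto the first one: the triangle
             * now has zero area, so it produces no fragments. */
            for (int i = 0; i < 3; ++i) {
                v1[i] = v0[i];
                v2[i] = v0[i];
            }
            ++collapsed;
        }
    }
    return collapsed; /* number of triangles made degenerate */
}
```

The vertex count stays the same, so the buffer layout does not change; only the position data would need to be re-uploaded if the waterline moved.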

Terrain Reduction

Very early in Cannonade’s development I found things to be running very slowly. This was before I coded anything with performance in mind and was just trying to get stuff up and working. I found a significant initial speedup by adding an algorithm that would calculate a quadrilateral area in the terrain array that would roughly encapsulate the currently visible terrain vertices. No sense in drawing terrain behind or off the camera right? This gave me the performance I needed at that early stage to regain some responsiveness to the interaction. There is a cost in calculating that visible set however. Because the visible square of terrain is not laid out linearly in RAM I needed to memcpy() those visible sections to a new buffer. Essentially this is moving more decisions of whether to draw triangles that are not visible in the view frustum from the GPU to the CPU. Interestingly this terrain reduction algorithm only seemed to give better results on the iPad. On the 3GS it appeared that simply passing the entire terrain buffer (even the stuff that isn’t visible) to the GPU was more efficient overall.
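The copy step described above can be sketched roughly as follows. This assumes the terrain is a row-major grid of vertices and that the visible area has already been reduced to an axis-aligned rectangle of grid cells; the `TerrainVertex` layout and the function name are made up for the example and are not taken from the game.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical interleaved terrain vertex: position + texture coords. */
typedef struct { float x, y, z, u, v; } TerrainVertex;

/* Copies a cols x rows rectangle out of a row-major grid into a tightly
 * packed buffer. Each visible row is contiguous in memory, but the rows
 * themselves are not adjacent, hence one memcpy() per row. */
size_t copy_visible_rect(const TerrainVertex *grid, size_t grid_width,
                         size_t col0, size_t row0, size_t cols, size_t rows,
                         TerrainVertex *out)
{
    assert(col0 + cols <= grid_width);
    for (size_t r = 0; r < rows; ++r) {
        const TerrainVertex *src = grid + (row0 + r) * grid_width + col0;
        memcpy(out + r * cols, src, cols * sizeof(TerrainVertex));
    }
    return rows * cols; /* vertices written to out */
}
```

The per-frame cost is the CPU time for these copies, which is the trade-off mentioned above: the CPU does culling work so the GPU receives fewer vertices.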

Partial Terrain Reduction

An enhancement to the above technique was to calculate a quadrilateral area of the visible terrain but to extend the sides such that the entire quadrilateral was already laid out linearly in the terrain memory/buffer. This made it so all I had to do was specify a linear chunk of the complete terrain memory/buffer. Unfortunately I never found a configuration on either device where this algorithm seemed to be optimal. The iPad always tended to be a little faster with the more precise reduction algorithm described above despite the memory copies, and the 3GS appeared to go slightly faster with no terrain reduction at all. Of course things could change completely if my terrain resolution were to increase or decrease.
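One way to picture the partial reduction, assuming a row-major terrain grid: widen the visible rectangle to whole rows, so the selection becomes a single contiguous run of vertices that can be addressed with an offset and a count instead of being copied. The `TerrainSpan` type and `full_row_span` name below are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* A contiguous run of vertices inside the full terrain buffer. */
typedef struct { size_t first_vertex; size_t vertex_count; } TerrainSpan;

/* Extends the visible rows [row0, row0 + rows) to the full grid width.
 * No copying is needed: the span points straight into the original
 * buffer, at the price of drawing some vertices that are off screen. */
TerrainSpan full_row_span(size_t grid_width, size_t row0, size_t rows)
{
    TerrainSpan span;
    assert(grid_width > 0);
    span.first_vertex = row0 * grid_width;
    span.vertex_count = rows * grid_width;
    return span;
}
```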

Short Terrain Vertex Positions and Texture Coordinates

The single most significant thing that my friend Mike Smith and I did to increase the framerate on Caster for iOS back in 2009 (check out my article Caster for iOS: A Postmortem) was to adopt a really compact, reduced and aligned vertex format for our terrain vertices. Naturally this was one of the first things I thought might help when I sat down to optimize Cannonade. For terrain I just had position and texture coordinates, both of which were in a float format. I could afford to lower my precision and use shorts for both position and texture coordinate data. I initially had a false start because I had forgotten to make sure that my entire vertex format was aligned to a 4 byte boundary. I added 2 bytes of padding to the end of the position attribute which then gave me a total of 12 bytes per vertex (3 shorts for position + 1 short for padding + 2 shorts for texture coordinates). It turned out that doing so actually had a slightly detrimental effect on performance on the iPad and a slight increase to no effect on the 3GS. One possible explanation for this unexpected outcome is the overhead of needing to introduce a scale factor in the GL_TEXTURE matrix mode (and maybe to a lesser extent the scale factor needed in the GL_MODELVIEW matrix mode as well). It could also be that such an optimization would be beneficial if the volume of vertices that I push were to rise significantly. For the time being I have decided to stick with floats in my terrain vertex format.
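For illustration, a 12-byte vertex of the kind described above could be declared like this. The struct and helper names are assumptions; `int16_t` stands in for `GLshort`, and the quantization helper is just one plausible way to map floats into the short range.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* 3 shorts of position + 1 short of padding + 2 shorts of texture
 * coordinates = 12 bytes, so every attribute starts on a 4-byte boundary. */
typedef struct {
    int16_t x, y, z;  /* position */
    int16_t pad;      /* alignment padding, never read by the GPU */
    int16_t u, v;     /* texture coordinates */
} PackedTerrainVertex;

/* Converts a float to a short using a caller-chosen scale
 * (units_per_float shorts per 1.0f), rounding to the nearest value. */
int16_t quantize_to_short(float value, float units_per_float)
{
    float scaled = value * units_per_float;
    assert(scaled >= -32768.0f && scaled <= 32767.0f);
    return (int16_t)(scaled + (scaled >= 0.0f ? 0.5f : -0.5f));
}
```

Because the stored values are scaled up, the inverse scale has to be applied at draw time, which is where the extra scale factors in the GL_MODELVIEW and GL_TEXTURE matrices come from.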

Interleaved Terrain Array

As it is said in many places, it is typically best to interleave your vertex attributes in one array as opposed to keeping separate arrays for position, texture coordinates, color etc… I could afford to interleave my terrain’s position and texture coordinates because both are expected to be relatively static throughout most of the game (not completely though). I found it made little difference to interleave my terrain attributes when not using VBOs (perhaps because of the relatively small amount of vertex data I am pushing through). I decided to keep going forward with an interleaved position and texture coordinate structure because I thought it might yield a better result when coupled with a VBO or if I significantly turned up the number of vertices I am using for terrain.

Interleaved Terrain Array VBO (Unindexed)

Because the terrain mesh does not change very often it only makes sense to keep it in hot, GPU owned memory as long as we can. So I created an unindexed VBO for terrain vertices and had it draw using that instead of just passing the pointer to the memory every time (when there was no terrain reduction I was able to use a VBO with a GL_STATIC_DRAW policy). I found very quickly that if I did not call glBufferData(GL_ARRAY_BUFFER, size, nil, GL_DYNAMIC_DRAW); before updating the VBO with new data that performance was absolutely dismal. Once I got things configured correctly VBOs interestingly only seemed to have a small effect on either the iPad or the 3GS. Depending on both the terrain reduction policy and the vertex data format, it experienced both a slight increase and decrease in performance from the baseline of just passing a pointer to glDrawArrays() every frame.

Interleaved Terrain Array VBO (Indexed)

The next thing to try was going to be an indexed VBO for the terrain mesh. This meant that all of the terrain vertex data could live in a GL_STATIC_DRAW VBO and I could just send up indices to the GPU to tell it what to draw. That generally meant there was less data that needed to be sent to the GPU in any given frame. In most cases (but not all) drawing indexed terrain appeared to be beneficial but only by a small to moderate amount. It sped up the 3GS more so than the iPad.

Interleaved Terrain Array VBO (Indexed) + Element Array VBO

The next thing to try was putting the indices in a VBO as well. This also seemed to produce a slight increase but still very small. Again the overall increase seemed more dependent on whether this optimization was coupled with a specific terrain reduction technique on a specific device. This appeared to be the fastest option on the iPad when terrain reduction was turned on with floating point position and texture coordinates. Even though there were some runs on the 3GS that appeared to be faster with an unindexed VBO or an indexed one with pointer fed indices, the results were so close that for simplicity’s sake I decided to go with this configuration.

Blocks

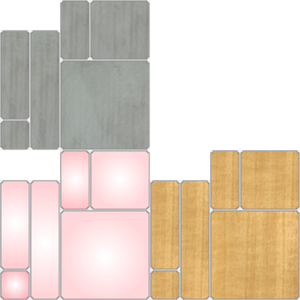

Block Texture Atlas

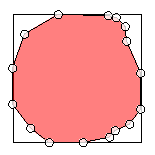

Originally, when drawing each block I simply bound its corresponding texture which was based on the block’s material type. This constant switching of which texture is currently bound is of course inefficient. So I constructed a texture atlas of all potential sides of every material. That way I can simply bind the one atlas texture every time I want to start drawing all my blocks. I then have to construct more specific texture coordinates for each side instead of just simply specifying the edges of the texture (0,0 0,1 1,1 1,0 which also have the nice characteristic of being easy to store in GL_UNSIGNED_CHARs without any scaling in the GL_TEXTURE matrix mode). I had coupled this texture atlas change with all of the Block VBO optimizations below because I don’t know of any way to change which texture is bound in the middle of an array draw in OpenGL ES 1.x. I suspect that it probably contributed to a significant overall performance increase on both devices but I didn’t test it in isolation from the Block VBO changes. One thing to watch out for in a texture atlas is that sometimes the texture lookups can sample slightly outside your intended area (this led to very subtle dark lines on the edges of some of my blocks that bordered unused portions of the texture atlas). So in my case I needed to make sure that anything that did get sampled outside my block borders would be ok if it were displayed.
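To make the "more specific texture coordinates" concrete, here is a small sketch that computes the UV rectangle for one tile of a square atlas divided into a regular grid. The grid layout, the names, and the optional `inset_texels` parameter are all assumptions for the example. Pulling the coordinates in by a fraction of a texel is a common alternative way to reduce bleeding from neighboring tiles; it is not what the post describes doing, which was to make the neighboring texels safe to display.

```c
#include <assert.h>

typedef struct { float u0, v0, u1, v1; } AtlasRect;

/* UV rectangle of tile (col, row) in a square atlas that is
 * atlas_size_px texels wide and split into cols x rows equal tiles.
 * inset_texels shrinks the rectangle on every side (0 disables it). */
AtlasRect atlas_tile_uv(int col, int row, int cols, int rows,
                        int atlas_size_px, float inset_texels)
{
    AtlasRect r;
    float inset;
    assert(col >= 0 && col < cols && row >= 0 && row < rows);
    assert(atlas_size_px > 0);
    inset = inset_texels / (float)atlas_size_px;
    r.u0 = (float)col / (float)cols + inset;
    r.v0 = (float)row / (float)rows + inset;
    r.u1 = (float)(col + 1) / (float)cols - inset;
    r.v1 = (float)(row + 1) / (float)rows - inset;
    return r;
}
```

With a 4 x 4 grid and no inset, tile (1, 2) spans u from 0.25 to 0.5 and v from 0.5 to 0.75.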

Tri-strip Blocks

The way I have been drawing my blocks has been relatively vertex expensive. I have been sending GL_TRIANGLES which means that for unindexed arrays I need to push 36 position vertices (8 unique) every time I want to draw a block. So I could either calculate 8 unique vertices, make some copies of them and push 36 mostly redundant vertices, or I could calculate and push the 8 unique vertices and then push 36 mostly redundant indices. I thought that this was pretty excessive and so I investigated an alternative method of drawing the blocks. I couldn’t use two GL_TRIANGLE_FAN primitives because I don’t know of any way to end the fan primitive mid draw command in OpenGL ES (NV_primitive_restart looked like an interesting idea in this regard but it has never gotten traction). It is generally accepted that GL_TRIANGLE_STRIP is a fast and efficient way to draw stuff because of its nice vertex reuse. Because none of my blocks are connected to each other I could insert duplicate vertices at the beginning and the end of each block to introduce degenerate triangles, which would allow me to use one giant array of tri-strip vertices for a bunch of blocks. I found a way to use tri-strips to draw a block with only 14 vertices, and with the first and last vertices duplicated this meant that I could get away with pushing only 16 vertices per block instead of 36. This did give me a nice but small speedup. However, I ran into a problem when it came to texturing. For a 1X1X1 block texturing was fine because I could produce a path of texture coordinates with a 1 to 1 relationship with the vertex positions such that it traced over the same single texture area for each side of the block (ie, the same texture is mapped onto every side of the 1X1X1 cube). However, when trying to find a path for irregular block shapes like 1X2X1 and the like, I could not find a texture atlas arrangement that would serve all the different shapes without horrendously wasteful use of space in the atlas. 
I did find some ways to create smaller atlases that could compactly serve 2 or even 3 of the different kinds of block types through clever use of texture wrapping. But I never found one that would serve all the different types I am currently using (much less those I might need in the future). Because I have been planning on potentially adding more types of block shapes in the future and doing a full tri-strip UV unwrap of every block type would be texture memory prohibitive, I decided to abandon this optimization for the time being. If I decide that I don’t need textured blocks or if I decide that I don’t care if the side textures stretch on the bigger blocks then I might re-visit this.
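The stitching trick can be sketched as below. The 14-entry corner ordering is one well-known way to cover a cube with a single strip and is not necessarily the ordering used in Cannonade; the corner numbering, the use of indices to express the order (the same order applies to an unindexed vertex stream), and all names are assumptions for the example. Winding is not checked here: the extra leading vertex shifts each block's strip by one position, which flips the winding relative to the unpadded strip, so the front-face setting or the starting corner would need to account for that.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum { CUBE_STRIP_LEN = 14, PADDED_STRIP_LEN = 16 };

/* Corner i of a block sits at (i & 1, (i >> 1) & 1, (i >> 2) & 1).
 * This ordering visits all 12 triangles of the cube in one strip. */
static const uint8_t kCubeStrip[CUBE_STRIP_LEN] =
    { 6, 7, 4, 5, 1, 7, 3, 6, 2, 4, 0, 1, 2, 3 };

/* Writes one long strip for block_count blocks. Block b is assumed to
 * own corners 8*b .. 8*b+7. The first and last entries of each block
 * are duplicated so the triangles bridging two blocks are degenerate. */
size_t build_block_strip(size_t block_count, uint16_t *out)
{
    size_t n = 0;
    assert(block_count * 8 <= 65536); /* indices must fit in 16 bits */
    for (size_t b = 0; b < block_count; ++b) {
        uint16_t base = (uint16_t)(b * 8);
        out[n++] = (uint16_t)(base + kCubeStrip[0]);
        for (int i = 0; i < CUBE_STRIP_LEN; ++i)
            out[n++] = (uint16_t)(base + kCubeStrip[i]);
        out[n++] = (uint16_t)(base + kCubeStrip[CUBE_STRIP_LEN - 1]);
    }
    return n; /* PADDED_STRIP_LEN entries per block */
}

/* Counts the strip triangles whose three corners are all different,
 * i.e. the ones that can actually produce fragments. */
size_t count_real_triangles(const uint16_t *strip, size_t len)
{
    size_t real = 0;
    for (size_t i = 0; i + 2 < len; ++i) {
        if (strip[i] != strip[i + 1] && strip[i + 1] != strip[i + 2] &&
            strip[i] != strip[i + 2])
            ++real;
    }
    return real;
}
```

For three blocks this produces 48 entries of which 36 triangles are real (12 per block); everything in between collapses to zero area.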

Block VBO (Unindexed)

Drawing blocks in Cannonade is an interesting scenario because there are long sets of frames where specific bunches of blocks are being updated and many long sets of frames where specific sets are perfectly still. We need the ability to continuously update the positions of the blocks but the texture coordinates can remain the same throughout the lifetime of the block. I decided to create a block position VBO and update the vertex positions as needed with glBufferSubData(). When a block was destroyed I would update each of its vertex positions to 0.0 so that I wouldn’t have to reorganize or re-create the VBO. I found some speedup in drawing blocks with a VBO on both devices but the real gain came when I stopped updating each moving block’s position piecemeal using glBufferSubData() (see below).

Block VBO (Unindexed) + Batched Block Updates

I had thought that it might be more efficient to just update the portions of the VBO as they changed because most of the time, most of the VBO was not changing. As it turns out, it was faster in every one of my configurations to just upload the entire contents of the VBO every frame instead. This produced a nice speedup especially on the 3GS.

Block VBO (Indexed) AND Block VBO (Indexed) + Element Array VBO

I did not spend much time with these changes because it meant that even though I was now reducing my vertex data down to 8 vertices per block (plus 36 indices) there still needed to be not quite 36 sets of texture coordinates (depending on texture re-use for various sides) and I would have to figure out the correct reduced set of unique texture coordinates plus the right set of an additional 36 indices just for the texture coordinates. I decided that first I would just reuse the position indices for the texture indices even though they would not make sense on some of the sides of the blocks so that I could gauge whether there were performance gains worth going after. It appeared that both the indexed Block VBO and the indexed Block VBO + Element Array VBO configuration did not make a significant difference so I abandoned doing any more investigation down those roads right now.

Per-player Block VBO

A general pattern that has emerged in the updates to the Block VBO is that when positions are being updated they are typically all blocks belonging to a single player. Thus if I were to give every player their own Block VBO then those players without any moving blocks could avoid the data update. Interestingly, doing so didn’t appear to have much of an impact although I suspect that it would in games with more than just two players. I decided to keep the optimization and expand the concept to not just one Block VBO per player but one opaque and one translucent Block VBO per player. That way I could enforce the opaque first drawing order and also do things like turn off drawing of a player’s translucent Block VBO when it shouldn’t draw the plasma blocks (currently the plasma blocks are the only translucent blocks so I might have to change this eventually).

Short Block Texture Coordinates

Like the terrain vertex format, I thought I might be able to squeeze out a little more performance if I replaced the float texture coordinates with short ones. I didn’t consider converting the position values from floats to shorts as well for three reasons. One was that I thought I might actually need the accuracy of floating point values to represent the block positions when they are moving very slowly. Next was that I didn’t want to have to come up with a value range for the positions because I don’t know what a reasonable one is yet (I would need a value range in order to scale the short values correctly). Lastly I thought that the conversion from the physics engine’s native internal floating point storage format to a short would be costly and offset any gains. So I haven’t actually tried making the block positions shorts, but with the gains I was making in other areas I had to choose how to spend my time wisely. I did however try out short texture coordinates which appeared to not have any significant effect on either device.

Other

CADisplayLink

Originally (and there is a chance this could still happen in the future) Cannonade was conceived as a live, actively connected internet game. All the local and internet game code is still present in the codebase and works beautifully. It keeps all game clients smooth, reaction times responsive, determinism is upheld and players are kept relatively sync’d with each other timewise (explaining how it works is a subject for an upcoming blog post sometime). One thing that was necessary to accomplishing all those features was the ability to dynamically and very specifically throttle the framerate on every connected client. Because of this I couldn’t use CADisplayLink for a long time. I know you can somewhat configure its firing time but it is only on the granularity of one screen refresh interval. Thus I had used an NSTimer so that I could finely tune the framerate on each client. In my decisions to pare down and simplify Cannonade as its development has gone forward, I had decided to focus on Cannonade as a purely disconnected, asynchronous game. This meant that I could now allow each player in the game to run the game engine as fast as it could and so using CADisplayLink for event loop timing was now an option. I was pleasantly surprised as it gave me a nice little bump on both devices. I suppose I should have expected as much because now the drawing of the frame is more closely synchronized alongside the moment the framebuffers need to be swapped.

16-bit Depth Buffer

Switching between a 24-bit depth buffer and a 16-bit depth buffer didn’t seem to make much of an image quality difference in my scenario. It seemed to make a slight difference in speed on the iPad. On a somewhat related note, Cannonade has always had a color buffer of format RGB565 (I haven’t run any comparisons between that and RGBA8).

Fanparticle

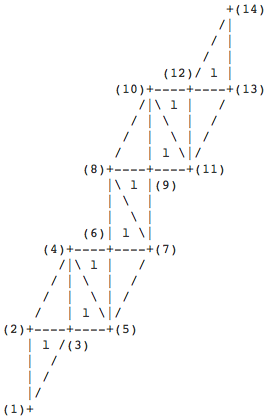

One neat technique that I learned from last year’s OpenGL ES Optimization WWDC session video which I hadn’t thought of before was sprite trimming. The idea originates in the fact that many transparent blended textures (like ones used for smoke effects and the like in particle systems) have a ton of completely transparent wasted pixels in the corners. All of those completely transparent pixels which have no effect on the final image outcome still take up valuable fragment processing time. So what might be valuable if you are drawing 100s of blended particle textures (like Cannonade is doing) is to trade off more vertex processing for less fragment processing by rendering the particle texture as an n-gon triangle fan (or similar concept) instead of rendering the particle as a quad made of two triangles. On the iPad I found that doing this had a noticeable small to moderate speed gain which seemed to emphasize yet again how often the iPad is fillrate limited. I have not done testing yet to see what effect it had on the 3GS.
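As a hedged illustration of sprite trimming, the sketch below builds an octagonal triangle fan around a particle whose visible content is assumed to fit inside the circle inscribed in the sprite's quad. The choice of an octagon, the vertex layout and the names are assumptions, not details from the WWDC session or from Cannonade.

```c
#include <assert.h>
#include <stddef.h>

/* Center + 8 rim vertices + the first rim vertex repeated to close the fan. */
enum { FAN_VERTEX_COUNT = 10 };

typedef struct { float x, y, u, v; } SpriteVertex;

/* tan(pi/8): where the octagon's corners cut the edges of the quad. */
#define OCTAGON_T 0.41421356f

/* Fills out[] with a fan for a sprite centered at (cx, cy) whose
 * original quad had half-size `radius`. */
void build_octagon_fan(float cx, float cy, float radius,
                       SpriteVertex out[FAN_VERTEX_COUNT])
{
    static const float rim[8][2] = {
        {  1.0f, -OCTAGON_T }, {  1.0f,  OCTAGON_T },
        {  OCTAGON_T,  1.0f }, { -OCTAGON_T,  1.0f },
        { -1.0f,  OCTAGON_T }, { -1.0f, -OCTAGON_T },
        { -OCTAGON_T, -1.0f }, {  OCTAGON_T, -1.0f }
    };
    assert(radius > 0.0f);
    out[0].x = cx;   out[0].y = cy;
    out[0].u = 0.5f; out[0].v = 0.5f;
    for (int i = 0; i <= 8; ++i) {
        const float *p = rim[i % 8];
        out[i + 1].x = cx + p[0] * radius;
        out[i + 1].y = cy + p[1] * radius;
        out[i + 1].u = 0.5f + 0.5f * p[0]; /* same texels as the quad would map */
        out[i + 1].v = 0.5f + 0.5f * p[1];
    }
}

/* Total area covered by the fan (rim is counter-clockwise). */
float fan_area(const SpriteVertex *fan, size_t count)
{
    float twice_area = 0.0f;
    for (size_t i = 1; i + 1 < count; ++i) {
        float ax = fan[i].x - fan[0].x,     ay = fan[i].y - fan[0].y;
        float bx = fan[i + 1].x - fan[0].x, by = fan[i + 1].y - fan[0].y;
        twice_area += ax * by - bx * ay;
    }
    return 0.5f * twice_area;
}
```

For a sprite with half-size 1 the quad covers an area of 4, while the octagon covers about 3.31, so roughly 17% fewer fragments are shaded in exchange for 10 vertices instead of 4.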

Disabled Fog

This one was the big one. Especially on the iPad (ding! fill rate). Turning off fog instantly gave the iPad around a 2X speedup in framerate. I guess I should have thought of this one earlier in the process. I was however using fog as part of my design to make the arena (which had walls at the time) seem bigger than it was. For this large of a speedup, the decision to design around not having glFog() was a no brainer. I decided to get rid of the walls and instead extended the idea of the lava going on in all directions. I changed the lower half of the sky box to be the same color as the lava so that it would look like it went on forever into the horizon. I actually think that it looks better the way it is now compared to before.

No color buffer glClear()

I had read in a few places that no longer clearing the color buffer (if you don’t plan on using it) could potentially yield some gains. I didn’t find that to be the case but perhaps there is some missing configuration that I didn’t have set up correctly in my tests.

Local ModelView

One thing I had been doing for a long time was getting my model view and projection matrices (for use in game interaction) from OpenGL ES using glGetFloatv() (yes I know, I am lazy). I knew that glGetFloatv() was expensive but I didn’t know just how much until after I turned it off. I finally got off my butt and started maintaining the model view and projection matrices locally in Cannonade myself. This meant that instead of a series of glRotate() and glTranslate() calls to place the camera, I perform the matrix rotations and translations myself and then just make one glMultMatrix() call to actually configure the camera. Then I no longer needed to query the OpenGL state machine for those values because I already had them. This seemed to give a small but noticeable speedup on both the iPad and the 3GS.
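Maintaining the matrices locally could look roughly like the sketch below. It uses column-major storage so the result could be handed to OpenGL ES in a single call; the `Mat4` type and function names are made up for the example, and the rotation helper takes a precomputed cosine and sine (for instance from `cosf()`/`sinf()`) to keep the snippet free of other dependencies.

```c
#include <assert.h>
#include <string.h>

/* Column-major 4x4 matrix: element (row r, column c) is m[c * 4 + r]. */
typedef struct { float m[16]; } Mat4;

Mat4 mat4_identity(void)
{
    Mat4 r;
    memset(r.m, 0, sizeof r.m);
    r.m[0] = r.m[5] = r.m[10] = r.m[15] = 1.0f;
    return r;
}

/* Returns a * b, the same order in which successive glRotate()/
 * glTranslate() calls post-multiply the current matrix. */
Mat4 mat4_multiply(const Mat4 *a, const Mat4 *b)
{
    Mat4 r;
    for (int c = 0; c < 4; ++c)
        for (int row = 0; row < 4; ++row) {
            float sum = 0.0f;
            for (int k = 0; k < 4; ++k)
                sum += a->m[k * 4 + row] * b->m[c * 4 + k];
            r.m[c * 4 + row] = sum;
        }
    return r;
}

Mat4 mat4_translation(float x, float y, float z)
{
    Mat4 r = mat4_identity();
    r.m[12] = x; r.m[13] = y; r.m[14] = z;
    return r;
}

/* Rotation about the y axis from a precomputed cosine and sine. */
Mat4 mat4_rotation_y(float cos_a, float sin_a)
{
    Mat4 r = mat4_identity();
    float len2 = cos_a * cos_a + sin_a * sin_a;
    assert(len2 > 0.999f && len2 < 1.001f);
    r.m[0] = cos_a;  r.m[8]  = sin_a;
    r.m[2] = -sin_a; r.m[10] = cos_a;
    return r;
}
```

The combined camera matrix can then be passed to the fixed-function pipeline once per frame, and the same local copy is available for game interaction without any glGetFloatv() round trip.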

No UIKit

I know that using UIKit on top of OpenGL is a big no-no in general but I have compelling reasons to use it. Believe me, I have gone back and forth on implementing everything in OpenGL but I have been there and done that and don’t want to go back. The primary reason I am using UIKit is that I want full unicode text support because I want Cannonade to be easily localizable and very language portable. Another reason is that I don’t want to have to re-implement my own event handling chain along with sliders and other widgets that I just get for free with UIKit. I also like being able to make custom CoreGraphics backed UIViews and take advantage of all the nice maturity that it entails. In tests where I stopped placing any UIKit on top of OpenGL at all there was a moderate speed boost. This made it a hard decision to keep UIKit in the game for now. The re-implementation of so much of the wheel was really the kicker. I just can’t justify spending development time in that area right now when so many other areas need the attention. Instead I have been thinking of clever ways to reduce UIKit usage like trying to figure out when I can fade out all the UIKit widgets during high action sequences and the like.

Opaque UIKit

By default all of my custom CoreGraphics backed widgets had some translucency. Even though the background of all of them is [UIColor clearColor] I thought that maybe if I turned all the actually drawn pixels to completely opaque (so that no blending has to occur when compositing those pixels in Core Animation) that might have an impact. I didn’t do extensive tests but it did seem to have a slightly positive impact but nothing too significant.

Split Draw

I had an idea that if I initiated certain expensive portions of the graphics engine and then switched to the physics simulation and other CPU work instead of continuing with the rest of the graphics work, the CPU and GPU might overlap more with non-blocking work. So I moved the sky box, terrain and lava rendering to before the physics and gameplay simulation. The rest of the graphics rendering like the blocks and particle systems would start once all the physics and gameplay were done being computed on the CPU. This didn’t seem to have any significant impact. I didn’t have any more time to look into this one but sometime in the future I would like to become proficient in being able to detect CPU/GPU stalls and then use similar techniques to work around them.

One Frame Ahead Physics Calculation

This was a similar idea to the one above. Instead of performing the physics simulation at the beginning of the event loop, I moved it to the end, after the framebuffers had already been told to swap. This too did not lead to any discernible difference in execution time.

More PVRTC textures

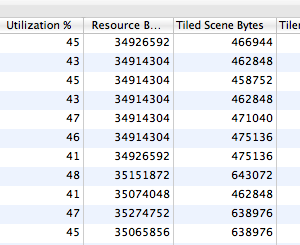

Once I had piled on a number of optimizations and gotten the engine running at a nice 60 fps on the iPad, I was pretty happy with myself. However, there was a weird little performance cliff that I could reliably fall off of, which would cause the framerate to go from a steady 60 fps to a steady 50 fps. The funny thing was that it happened during scenes where no action was happening, nothing was moving and the camera was standing still. But there it was, a 10 fps hole depending on the angle from which I looked at the scene. A few degrees up, down, left or right and it was back to 60 fps. I used Instruments to try to identify what was different between those two viewpoints that caused the sudden 10 fps dip. I noticed a correlation between the value of “Resource Bytes” and the framerate: whenever “Resource Bytes” crept over 32MB I would get hit. This made sense because I had read that 32MB is the limit of fast active GPU memory on the SGX (24MB on the MBX). So I set out to see what I could do to lower my texture memory usage. At the time I was using big and expensive RGBA textures for the sky box, terrain texture and lava texture. I decided to convert them all to 4 bpp PVRTC and see if that had any effect. It did seem to lower my overall texture memory usage and kept me from falling over the 32MB cliff as often (I still fell off occasionally though). Unfortunately the image quality compromise on the sky box was noticeable. The lava texture no longer melded seamlessly into the lava-colored sky box, so I had to reconsider my use of PVRTC for the sky box. The terrain and lava, however, looked pretty good and I will probably keep them as PVRTC.
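To put rough numbers on the savings, here is a small C sketch of the arithmetic. It assumes 32-bit RGBA (4 bytes per texel) for the uncompressed case and the usual PVRTC 4 bpp layout of 8 bytes per 4x4 texel block with a minimum of 2x2 blocks. The function names and the 1024x1024 example size are illustrative assumptions, since the actual texture dimensions are not stated here.

```c
#include <stddef.h>

/* Bytes for one mip level of uncompressed 32-bit RGBA: 4 bytes per texel. */
size_t rgba8888_bytes(size_t width, size_t height) {
    return width * height * 4;
}

/* Bytes for one mip level of PVRTC at 4 bits per pixel:
   8 bytes per 4x4 texel block, with at least 2x2 blocks stored. */
size_t pvrtc4_bytes(size_t width, size_t height) {
    size_t blocks_w = width / 4;
    size_t blocks_h = height / 4;
    if (blocks_w < 2) blocks_w = 2;
    if (blocks_h < 2) blocks_h = 2;
    return blocks_w * blocks_h * 8;
}
```

For a hypothetical 1024x1024 texture, rgba8888_bytes gives 4,194,304 bytes (4 MiB) and pvrtc4_bytes gives 524,288 bytes (512 KiB), an 8x reduction for the base level. A full mipmap chain adds roughly a third on top of either figure.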

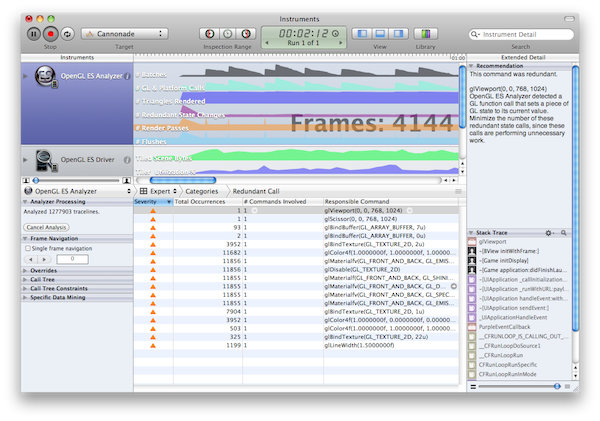

State Change Minimizations

One OpenGL optimization that has never gone out of style is to minimize the number of state changes you perform and to get rid of redundant state changes altogether. The OpenGL ES Analysis Instrument in Xcode 4 is extremely useful in this regard. In addition to keeping detailed logs of every single OpenGL API call, it flags state change calls that are redundant (i.e., they attempt to set a variable to the value it already has). With this instrument I was able to quickly eliminate many wasteful state change calls. I also did a top-to-bottom analysis of my engine’s graphics pipeline to see if I could coalesce certain sections together by common state, and I worked out which state values should be considered the “default” for my specific engine setup so as to minimize the number of times I would have to change away from the default. Each change had only a very slight impact individually, but collectively all of my OpenGL state related changes amounted to a small but noticeable speed boost.
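One common way to drop redundant state changes is to keep a shadow copy of the state on the CPU and only forward a call when the value actually changes. The following is a minimal sketch of that pattern, not the engine’s actual code. StateCache and cached_bind_texture are hypothetical names, and fake_bind_texture is a stand-in for a real call such as glBindTexture so the example compiles without an OpenGL context.

```c
#include <stdbool.h>

/* Stand-in for a real GL call such as glBindTexture. It only counts
   invocations so the effect of the cache can be observed. */
int driver_calls = 0;
static void fake_bind_texture(unsigned int texture) {
    (void)texture;
    driver_calls++;
}

/* Shadow copy of the state we believe the driver currently holds. */
typedef struct {
    bool         valid;          /* false until the first bind goes through */
    unsigned int bound_texture;  /* last texture forwarded to the driver */
} StateCache;

/* Forward the bind only when it would actually change the bound texture. */
void cached_bind_texture(StateCache *cache, unsigned int texture) {
    if (cache->valid && cache->bound_texture == texture) {
        return;  /* redundant state change: skip the driver call */
    }
    fake_bind_texture(texture);
    cache->bound_texture = texture;
    cache->valid = true;
}
```

With a zero-initialized StateCache, binding textures 7, 7, 9, 9 in that order reaches the stand-in driver call only twice. The cache is only trustworthy if every bind goes through the wrapper, so any code that calls the real GL function directly would need to invalidate it.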

Still need to look at

Although I looked at quite a few different ways to squeeze as many frames per second as possible out of my base supported hardware, there are still avenues that I am aware of but didn’t get to in my week and a half of graphics engine optimization. One of those is investigating whether using glMapBuffer() to update VBO data would be any faster than glBufferData() and friends. Another one I didn’t have time to look into was whether collapsing a lot of my state setup into VAOs would have made any noticeable impact. As was mentioned in the tri-strip blocks section, I would eventually like to revisit the idea of using that rendering methodology for the blocks once I figure out a good way to create the requisite texture atlas efficiently. The single biggest unturned stone that I am aware of is OpenGL ES 2.0. There are a lot of things that could potentially be done faster and more efficiently in a shader (the particle system immediately springs to mind). Cannonade’s graphics engine was originally written against OpenGL ES 1.x because it was going to target the armv6/MBX class hardware. Now that the minimum system has been upgraded to armv7/SGX I can afford to move to OpenGL ES 2.0, but a complete rewrite would not be trivial. Seeing that I have already gotten some really nice speed gains, I haven’t felt the need to do so quite yet (it will happen at some point though).

Conclusion

When all was said and done, after a week and a half I was able to get about a 3X speed increase on the 1st gen iPad and about a 2X increase on the 3GS. I am very happy with my results. It is true that I wasn’t being extremely performance conscious in my development prior to this point, so I had a lot of room to make gains, but I sure wasn’t being wasteful either. If it wasn’t obvious from the numerous mentions above, the big performance problem to watch out for on the iPad is anything that causes more fragment processing or lengthier fragment processing. The 3GS benefited more from vertex optimizations, and in general the hit on the CPU was more significant (which was expected due to the clock difference). I certainly had fun learning more about iOS’s OpenGL ES implementation and hardware interaction. It is my hope that others who are currently looking for ways to speed up their OpenGL ES app have found at least one idea here that they had not thought of. Got any other techniques that you have investigated or tried out? Tell me about them in the comments below, even if they didn’t work out. You never know where the inspiration for the next useful graphics optimization might come from.

July 17th, 2011 at 10:08 pm

Awesome! Thanks for publishing your findings! I hadn’t thought about the issue with fog on the iPad. Definitely one to consider.

May 10th, 2013 at 6:55 pm

The 4-byte (32 bit) align fixed an iPhone 3GS slowdown for me, thanks! I was previously using 8 bytes per vertex – 3 shorts for pos, then bytes 6 and 7 for tex id (array) and ‘brightness’. By padding 6+7, and using 8+9 instead (also padding 10+11) the 3GS is rendering 4x faster! iPad 1st gen didn’t need the padding.