CloudFlare as a DB Read Cache

September 14th, 2013

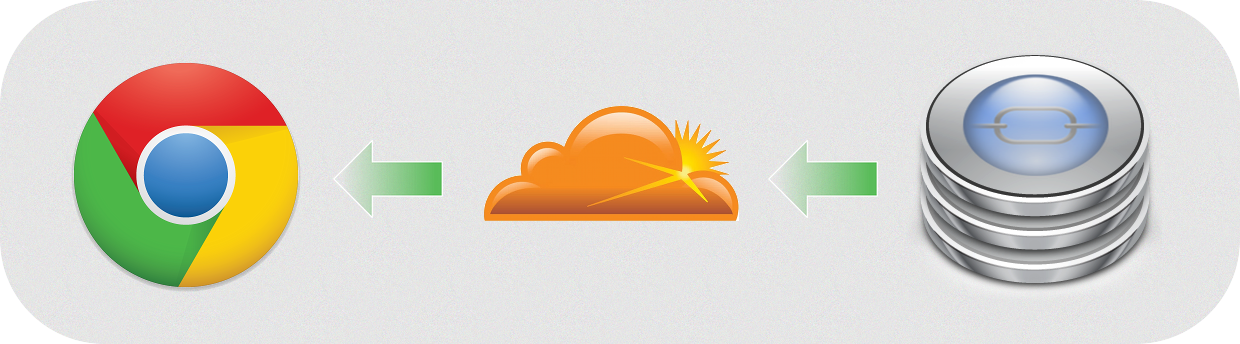

CloudFlare is a popular, DNS-level proxy service that has layered on tons of useful features over the years, like asset optimization and denial of service attack mitigation. Probably the most prominent service they provide, though, is caching. This caching functionality is primarily geared towards webpage content. Out of the box, CloudFlare’s caching settings work great: it intelligently decides what kind of files to cache (images, CSS, JavaScript) and what not to cache (HTML or other files that could contain dynamic content). You can tune CloudFlare’s settings to fit your specific needs and do a lot to lighten the load on your server. I started to think about all the interesting things I could do with a distributed caching layer that sits in front of my servers. I was designing the back end of ultralink.me at the time and thought: “Gee, CloudFlare might actually make a pretty decent read cache!”

Now I have to start this article with some caveats. Using CloudFlare in this manner will not solve all your problems or even be appropriate in many cases. But if you do have a certain kind of workload and can expose the interface to your database in a specific way, it can really do a lot to absorb your database read traffic.

Test Case: ultralink.me

The core problem flow that ultralink.me deals with can be described like this:

- Client (browser extension, site plugin, etc) wants to know what ultralinks are present in a paragraph of text (on a webpage for example).

- Client contacts ultralink.me with the text and asks for a list of the ultralinks it contains.

- ultralink.me leverages its large repository of ultralink data, analyzes the text and responds to the client with the answer.

Now let’s start restructuring the problem so that it can work more quickly and efficiently. The most intensive operation in the chain is the analysis of the text when it arrives at ultralink.me. Anything we can do to avoid that analysis will be a performance win. So obviously we can keep a cache in our database of the answers for any given chunk of text that comes in. That way, if a client asks for the ultralinks in a chunk of text that ultralink.me has already seen, the database can serve up the answer from its cache. The database cache on the server is continually added to and cleaned out as the ultralink database changes or as the data in the cache gets old (we just nuke anything over 31 days old).
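To make the 31-day cleanup concrete, here is a minimal in-memory sketch. The real cache lives in the database, so the `AnswerCache` class, its method names and the dictionary storage are all hypothetical stand-ins used only for illustration.

```python
from datetime import datetime, timedelta

# Entries older than this are dropped, matching the 31-day rule in the text.
MAX_AGE = timedelta(days=31)


class AnswerCache:
    """Hypothetical stand-in for the database-side answer cache."""

    def __init__(self):
        self._entries = {}  # key -> (answer, stored_at)

    def put(self, key, answer, now):
        self._entries[key] = (answer, now)

    def get(self, key):
        entry = self._entries.get(key)
        return entry[0] if entry else None

    def prune(self, now):
        """Delete entries older than MAX_AGE and return how many were removed."""
        stale = [
            key
            for key, (_, stored_at) in self._entries.items()
            if now - stored_at > MAX_AGE
        ]
        for key in stale:
            del self._entries[key]
        return len(stale)
```

Passing `now` in explicitly keeps the sketch easy to test; a real job would likely run the equivalent delete as a scheduled database query.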

Hash It Up, Fuzzball

For the client to ask the server to analyze a specific chunk of text, it doesn’t always need to send the text itself to the server. Instead it can create a hash of the text (content hash) as well as a hash of the URL it was found on (content URL hash). The client can then send this pair of hashes to ultralink.me. The hash pair is sufficient information for the server to look up the correct answer for that chunk of text if it is present in the cache. If the answer is not found in the cache, then the server reports back to the client that it needs to send the text chunk up for analysis (incurring an additional round trip).
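A rough sketch of how a client might build the hash pair follows. The article does not say which hash function ultralink.me uses, so SHA-256, the UTF-8 encoding and the `hash_pair` name are assumptions made purely for illustration.

```python
import hashlib
from typing import Tuple


def hash_pair(content_url: str, text: str) -> Tuple[str, str]:
    """Return (content URL hash, content hash) as hex strings.

    SHA-256 is an assumption; the original post does not name the algorithm.
    """
    url_hash = hashlib.sha256(content_url.encode("utf-8")).hexdigest()
    content_hash = hashlib.sha256(text.encode("utf-8")).hexdigest()
    return url_hash, content_hash
```

Whatever function is used, the client and server only need to agree on it, and the same text on the same page must always produce the same pair.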

Fortunately for us, we anticipate that as we scale up and gain more users, the likelihood that the answer is already present in the cache will become increasingly high (the same popular websites and articles are always hit by a ton of people). Eventually the vast majority of incoming requests will simply be served up by the database cache (making our workload mostly reads). Now let’s see how to get CloudFlare to do that for us.

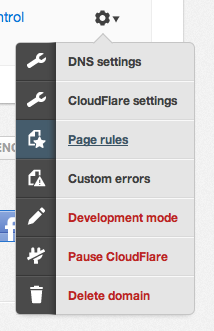

Ugh, You and Your Rules

CloudFlare’s caching policies can be finely tuned in the ‘Page Rules‘ section of their dashboard for your website. The rules are based around URLs and so we are going to have to structure our database interfaces around a specific URL scheme. When a client contacts ultralink.me with the hash pair, instead of passing them as GET or POST parameters, we use them to construct the URL itself. The client simply performs a GET on a URL that looks something like this: (server host)/API/(content URL hash)/(content hash). The server picks up the hash pair from the constructed URL and performs the cache lookup. Now that the information passed to our database is completely contained inside the URL, we can make a custom CloudFlare page rule to cache the results that are returned from these URLs.
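As a sketch of the URL scheme, the client side builds the lookup URL and the server side pulls the pair back out of the path. The function names, the `https` scheme and the strict two-segment parsing are assumptions; only the `(server host)/API/(content URL hash)/(content hash)` layout comes from the description above.

```python
from typing import Optional, Tuple


def lookup_url(server_host: str, url_hash: str, content_hash: str) -> str:
    """Client side: put the hash pair into the URL path (https is assumed)."""
    return f"https://{server_host}/API/{url_hash}/{content_hash}"


def parse_lookup_path(path: str) -> Optional[Tuple[str, str]]:
    """Server side: recover (content URL hash, content hash) from a request path.

    Returns None when the path does not match /API/<hash>/<hash>.
    """
    parts = path.strip("/").split("/")
    if len(parts) != 3 or parts[0] != "API" or not parts[1] or not parts[2]:
        return None
    return parts[1], parts[2]
```

Because nothing travels in query or POST parameters, two clients asking about the same text request exactly the same URL, which is what lets a URL-keyed cache do the work.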

In the ‘Page Rules‘ section we make a new rule that goes like this:

| Setting | Value |
| --- | --- |
| URL pattern | *(server host)/API/* |
| Custom Caching | Cache everything |
| Edge cache expire TTL | Respect all existing headers |
| Browser cache expire TTL | (as low as your plan allows) |

All the other options can be turned off or left at their defaults. We want to make sure that this caching policy is going to cover any combination of hashes present in the URL and that it will always be cached.

Edge cache expire TTL indicates how long (maximum) CloudFlare keeps the result in its cache. Our API endpoints need to have control of this and so we make sure the setting is “Respect all existing headers”. CloudFlare makes no guarantees as to how long something will actually remain cached in any of their datacenters (this can vary due to regional load, frequency of access, what specific plan you have, etc.) but at least we can give it a hint.

Browser cache expire TTL indicates how long the client (i.e. a web browser) can or should keep the result in its own cache. We generally set this one as low as we can so that when there are database changes which affect the content behind hash pairs, it won’t be too long before the client can contact our server again (by way of CloudFlare) for an updated answer. Exact web browser policies on XHR result caching vary (Chrome is very aggressive).

Major Tom to Cache-Control

Back on our server, we now need to make sure that our API endpoints return the correct ‘Cache-Control‘ header so that CloudFlare’s datacenters know what to do. If the hash pair is not found in the server’s database cache (a miss) then we respond to the client that we don’t have the answer and that they need to send us the text for analysis. We need to make sure that CloudFlare knows to not cache this ‘miss’ response and so we send back a “Cache-Control: no-cache” HTTP header. When we get a cache ‘hit‘ then our server sends back a “Cache-Control: public, max-age=2678400” header to hint that ideally, we want this response to be cached at CloudFlare for 31 days.
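The header choice boils down to a single branch. A minimal sketch, assuming a hypothetical `cache_headers` helper that your endpoint calls before sending its response:

```python
# 31 days expressed in seconds: 31 * 24 * 60 * 60 = 2678400
THIRTY_ONE_DAYS = 31 * 24 * 60 * 60


def cache_headers(hit: bool) -> dict:
    """Pick the Cache-Control header for a lookup response."""
    if hit:
        # A hit is safe to keep at the edge for as long as our own cache keeps it.
        return {"Cache-Control": f"public, max-age={THIRTY_ONE_DAYS}"}
    # A miss must not be cached, or later lookups would keep seeing the miss.
    return {"Cache-Control": "no-cache"}
```

The second branch matters most: a cached miss would hide the answer from every client even after the text has been analyzed.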

So at this point, the flow now looks like this:

Worst Case

- Client wants to know what ultralinks are present in a paragraph of text.

- Client creates URL from hash pair and contacts the nearest CloudFlare datacenter.

- CloudFlare doesn’t have the requested URL cached and so it passes the request along to ultralink.me.

- ultralink.me does a lookup for the hash pair and doesn’t find it so it responds to the client with a ‘miss’.

- Client packages up the text content and passes it to ultralink.me for analysis.

- ultralink.me analyzes the text, responds to the client with the answer and enters it into its cache.

Good Case

- Client wants to know what ultralinks are present in a paragraph of text.

- Client creates URL from hash pair and contacts the nearest CloudFlare datacenter.

- CloudFlare doesn’t have the requested URL cached and so it passes the request along to ultralink.me.

- ultralink.me does a lookup for the hash pair and finds the answer so it responds to the client with it.

- As it passes back through CloudFlare, the Cache-Control header hints that this result should be cached by CloudFlare’s datacenters.

Best Case

- Client wants to know what ultralinks are present in a paragraph of text.

- Client creates URL from hash pair and contacts the nearest CloudFlare datacenter.

- CloudFlare now has the result cached and so it just serves it directly back to the client.
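The three cases can be walked through with a toy model. This is only a sketch: `Origin` and `Edge` are hypothetical in-memory stand-ins for ultralink.me and a CloudFlare datacenter, and the real edge makes no promise to keep an entry for its full lifetime.

```python
class Origin:
    """Stand-in for ultralink.me and its database cache."""

    def __init__(self):
        self.db_cache = {}
        self.lookups = 0  # how many requests actually reached the origin

    def lookup(self, path):
        self.lookups += 1
        if path in self.db_cache:
            return {"status": "hit", "body": self.db_cache[path],
                    "cache_control": "public, max-age=2678400"}
        return {"status": "miss", "body": None, "cache_control": "no-cache"}

    def analyze(self, path, text):
        answer = f"ultralinks for {len(text)} chars"  # placeholder for real analysis
        self.db_cache[path] = answer
        return answer


class Edge:
    """Stand-in for a caching proxy that respects the origin's Cache-Control."""

    def __init__(self, origin):
        self.origin = origin
        self.cache = {}

    def get(self, path):
        if path in self.cache:
            return self.cache[path], "edge"
        response = self.origin.lookup(path)
        if response["cache_control"].startswith("public"):
            self.cache[path] = response
        return response, "origin"
```

Calling `edge.get(path)` three times, with one `origin.analyze(path, text)` after the first call, reproduces the worst, good and best cases in order. In this toy model the third call never touches the origin.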

Invalidation Nation

Now we effectively have CloudFlare serving as a database read cache! But there is more to be done. What if the ultralink database changes so that the results for a given text chunk are now old and stale? Between the client and the ultralink.me database we have three different caching layers that can potentially be at work:

- ultralink.me database cache.

- CloudFlare cache.

- Web browser or other client level cache.

When the answer for a given text chunk (and in turn, hash pair) becomes out of date, we need to make sure that cache entries for that hash pair at all three levels are invalidated and cleared. The first cache is easy enough. We simply delete the cache entry in our own database. If the third level is in a web browser then we can’t do too much about it other than to make sure that CloudFlare’s Browser cache expire TTL setting is as low as our plan allows. Then it is simply a matter of waiting for it to expire in the web browser. As for the second caching layer, we can take advantage of CloudFlare’s API to invalidate specific URLs when they become stale.

The database server can use the zone_file_purge API call to invalidate URLs from CloudFlare’s cache one by one. All you need to do is pass it these arguments:

| Argument | Value |
| --- | --- |
| a | zone_file_purge |
| tkn | (your CloudFlare account API key) |
| email | (your CloudFlare account email) |
| z | (your site domain) |
| url | (the URL you want invalidated) |
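Assembling those arguments might look like the sketch below. It only builds the form-encoded request body and does not send anything, since the API endpoint is not given in this article. The helper names are hypothetical, and the `email` key name for the account email argument is an assumption.

```python
from urllib.parse import urlencode


def purge_params(api_key: str, email: str, domain: str, stale_url: str) -> dict:
    """Collect the arguments for a single zone_file_purge call."""
    return {
        "a": "zone_file_purge",
        "tkn": api_key,
        "email": email,  # key name assumed, see the note above
        "z": domain,
        "url": stale_url,
    }


def purge_body(api_key: str, email: str, domain: str, stale_url: str) -> str:
    """Form-encode the arguments, ready to be sent to the API endpoint."""
    return urlencode(purge_params(api_key, email, domain, stale_url))
```

Since each call covers one URL, a batch of stale hash pairs means one request per URL, so it is worth pacing them and checking each response.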

CloudFlare makes no guarantees as to how long it will take to invalidate their cache(s) for a given URL. In practice though, we have found it to be pretty quick. You may want to keep a close eye on the return codes for your zone_file_purge calls because if you invalidate a lot of URLs very quickly then you can bump up against their API rate limit. I have filed a request with CloudFlare to augment their API with a call that allows us to batch send URLs for invalidation. This would reduce the likelihood of hitting the API rate limit and greatly reduce the overhead associated with individual invalidation requests. If you want this too, feel free to let them know and Submit a Request.

Victory Dance

There we have it! CloudFlare is now absorbing the majority of our database reads while also staying up to date with the latest results. So if you have a database load that is read-heavy and does not require authentication, and you can restructure your interfaces around URLs, then give CloudFlare a try (their free account is very generous). See if they can’t lighten your load.