Caster for iPhone: A Postmortem

October 31st, 2009


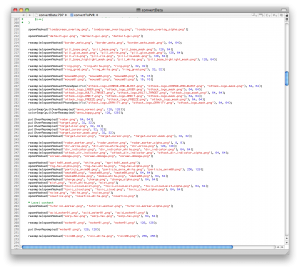

Along with the audio, we started working on the asset conversion script. Its first job was to convert the existing ogg sound files into wavs using oggdec and then into ima4 caff files using afconvert. (We had the original wav files somewhere, but at the time it was easier to source from the desktop assets.)

The next big job was to convert textures to take full advantage of PVRTC, the PowerVR’s optimized texture format, using texturetool. We added a PVRTC texture loader to our texture manager’s standard loaders so that if it saw a .pvrtc version of the file it was looking for, it would favor that one. That way we could progressively convert texture assets as we pleased. We also added a debug texture that we used to indicate missing or unconverted textures.

One major issue we faced was that a lot of the in-game textures are generated on the fly at runtime (multiple independent textures are tinted, masked, blurred together, etc.). This was taking a fair amount of time during asset loading, and attempting a runtime PVRTC conversion on top of it would have been unwieldy. So we started working on an image compute tool that performs the same texture construction operations that occur in Caster at runtime and outputs a finished file that we could load instead. Companion code in Caster looks for a pre-computed texture and favors it over creating the texture at runtime. We decided that the disk space hit of storing many similar textures was worth the savings during loading and the ability to use PVRTC formatted textures.

For each individual graphical asset, the asset conversion scripts define the optional image computation behavior (using our imageCompute tool), the texture format (texturetool), and the resolution (sips), or just pull the asset over unmodified. Tweaking the conversion policies for all of the graphical assets was something that we spent a lot of time on. We found that some textures looked better uncompressed, and that some could be downsampled significantly while others needed to stay at a fairly high level of detail. It was mostly a game of “let’s see what it looks like if we do this” and feeling our way around what seemed to work best. Getting the graphical assets retooled helped a lot in terms of both rendering performance and disk space. It also significantly cut down resource loading time and helped pull the launch time down to a reasonable amount.
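As a rough illustration of the pipeline described above, here is a minimal Python sketch of a per-asset policy table that expands into oggdec, afconvert, sips and texturetool command lines, plus the loader-side preference for a .pvrtc file. It is not the actual Caster script: the asset names, the policy keys, the `DEBUG_TEXTURE` name and the specific tool options chosen are assumptions made for the example, and the functions only build commands rather than run them.

```python
from pathlib import PurePosixPath

# Hypothetical policy table: asset names and values are illustrative only.
POLICIES = {
    "textures/grass.png": {"format": "pvrtc", "bpp": 4, "size": 256},
    "textures/logo.png": {"format": "raw"},  # e.g. looked better uncompressed
}
DEBUG_TEXTURE = "textures/debug.png"  # assumed name for the debug texture


def sound_commands(ogg, out_dir):
    """ogg -> wav via oggdec, then wav -> IMA4 in a CAF file via afconvert."""
    ogg, out_dir = PurePosixPath(ogg), PurePosixPath(out_dir)
    wav = out_dir / (ogg.stem + ".wav")
    caf = out_dir / (ogg.stem + ".caf")
    return [
        ["oggdec", "-o", str(wav), str(ogg)],
        ["afconvert", "-f", "caff", "-d", "ima4", str(wav), str(caf)],
    ]


def texture_commands(png, out_dir, policy):
    """Build the resize / compress / copy steps for one texture."""
    png, out_dir = PurePosixPath(png), PurePosixPath(out_dir)
    cmds, src = [], png
    if "size" in policy:  # downsample with sips (height, then width)
        src = out_dir / png.name
        n = str(policy["size"])
        cmds.append(["sips", "-z", n, n, str(png), "--out", str(src)])
    if policy["format"] == "pvrtc":
        out = out_dir / (png.stem + ".pvrtc")
        cmds.append(["texturetool", "-e", "PVRTC", "--channel-weighting-linear",
                     "--bits-per-pixel-%d" % policy["bpp"],
                     "-o", str(out), str(src)])
    elif src == png:  # nothing to do: pull the file over unmodified
        cmds.append(["cp", str(png), str(out_dir / png.name)])
    return cmds


def pick_texture(name, available):
    """Loader side: favor a .pvrtc version, then the original, else debug."""
    pvrtc = str(PurePosixPath(name).with_suffix(".pvrtc"))
    if pvrtc in available:
        return pvrtc
    return name if name in available else DEBUG_TEXTURE
```

Keeping the policy as data makes the “let’s see what it looks like if we do this” loop cheap: changing one entry and re-running the script is all it takes to try a different format or resolution for a texture.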

At the same time that we were refining the graphical assets, we were also making great strides in re-tooling the graphics code to do things the OpenGL ES way. We started converting code that used immediate mode to use arrays that we would hand to OpenGL ES. For the desktop version we had decided to use immediate mode after doing performance analysis on older hardware: immediate mode was just as fast as buffers, if not faster in some cases, because of driver optimizations. Since our models were relatively low poly, it never made sense to shy away from immediate mode on the desktop (and still doesn’t). We also started to experiment with cutting down the draw distance, which had a huge positive impact on framerate, but the further we cut it the more blind you felt during gameplay. By this time in the porting process we could run around through a lot of the levels in the game with our rudimentary control code. Things mostly looked and behaved fairly close to what we expected, but there were still a lot of rough patches. We hadn’t really even started on the control scheme, and things were still running fairly slowly.
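To make the immediate-mode-to-arrays change concrete, here is a small Python sketch of the general idea: calls that look like immediate mode are recorded into flat arrays, which can then be submitted in one go. The `TriangleBatch` class and the `within_draw_distance` helper are hypothetical names invented for this example, not Caster’s code. On the device, the arrays would be passed to the OpenGL ES 1.1 calls named in the comments.

```python
from array import array


class TriangleBatch:
    """Records immediate-mode style calls into flat client-side arrays."""

    def __init__(self):
        self.positions = array("f")  # x, y, z per vertex
        self.colors = array("f")     # r, g, b, a per vertex
        self._color = (1.0, 1.0, 1.0, 1.0)

    def color(self, r, g, b, a=1.0):
        # Like glColor4f: sets the color used by the vertices that follow.
        self._color = (r, g, b, a)

    def vertex(self, x, y, z):
        # Like glVertex3f, but nothing is sent to the GPU yet.
        self.positions.extend((x, y, z))
        self.colors.extend(self._color)

    @property
    def vertex_count(self):
        return len(self.positions) // 3

    # On OpenGL ES 1.1 the whole batch would then be submitted with:
    #   glEnableClientState(GL_VERTEX_ARRAY / GL_COLOR_ARRAY)
    #   glVertexPointer(3, GL_FLOAT, 0, positions)
    #   glColorPointer(4, GL_FLOAT, 0, colors)
    #   glDrawArrays(GL_TRIANGLES, 0, vertex_count)


def within_draw_distance(camera, center, max_distance):
    """Cheap cull: compare squared distances to avoid a square root."""
    dx, dy, dz = (c - p for c, p in zip(center, camera))
    return dx * dx + dy * dy + dz * dz <= max_distance * max_distance


batch = TriangleBatch()
batch.color(1.0, 0.0, 0.0)
for x, y, z in [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]:
    batch.vertex(x, y, z)
```

The calling code keeps its begin/vertex shape, which can make an incremental port easier, while the driver sees a single array submission per batch instead of one call per vertex.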

Because we had arrived at a point where we could start playing the game on iPhone OS devices, we started experimenting with various control schemes. Most of the control schemes that appealed to us were centered around the idea that you use both thumbs on opposite sides of the screen. However, we knew that we didn’t want any static graphical control overlays. We eventually settled on having invisible floating nubs on the left and right sides of the screen that would move depending on the location of the initial thumb contact. Double tapping and holding would perform the appropriate functions depending on the side of the screen you tapped on. It ended up being something we were very proud of: it was very natural, tight, fast and responsive. At one point in development we experimented with optional use of the accelerometer to trigger jumping. While it worked fairly well, we thought its specific interface might confuse people, and it also didn’t work very well in bumpy environments such as a train or a bus, where it would be triggered by false positives. We think that we got the controls just right for our genre, although control remains something very controversial on the iPhone, and it seems that most people either think our control scheme is perfect or just don’t get it at all.
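The floating nub idea can be sketched in a few lines of Python. This is only one plausible reading of the description above, not the shipped implementation: the `FloatingNub` class, the 40-point radius and the half-screen split in `side_for_touch` are all assumptions made for illustration.

```python
import math


class FloatingNub:
    """Invisible thumbstick whose center is wherever the thumb first lands."""

    def __init__(self, radius=40.0):  # radius in points; an assumed value
        self.radius = radius
        self.anchor = None

    def touch_began(self, x, y):
        # The nub "floats" to the initial contact point.
        self.anchor = (x, y)

    def touch_moved(self, x, y):
        """Return (x, y) axis values in [-1, 1] relative to the anchor."""
        if self.anchor is None:
            return (0.0, 0.0)
        dx, dy = x - self.anchor[0], y - self.anchor[1]
        dist = math.hypot(dx, dy)
        if dist > self.radius:  # clamp to the edge of the nub
            dx, dy = dx * self.radius / dist, dy * self.radius / dist
        return (dx / self.radius, dy / self.radius)

    def touch_ended(self):
        self.anchor = None


def side_for_touch(x, screen_width):
    """Assumed split: the left half of the screen drives the left nub."""
    return "left" if x < screen_width / 2 else "right"
```

Because the anchor is set on every new touch, the player never has to find a fixed on-screen stick; the control is wherever the thumb comes down.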

In addition to the in-game controls, we spent a lot of time reworking the menus and other interfaces. The menus needed to be completely re-tooled and re-thought because of the small screen. We ended up blowing a lot of buttons up proportionally and simplifying many screens into their core components. There were also other interesting considerations, like the fact that there is no concept of a cursor “hover” on the iPhone. We used cursor hover on the desktop versions for various purposes and had to figure out ways around that concept on a couple of screens.

We then looked into getting music support up and running using AVAudioPlayer so that we could take advantage of the audio hardware (it is an iPod after all). Fortunately, it was a fairly painless process to get working. As with the sounds, we converted the music from its native ogg format into caff files and then into aac format.

At this point we started a gradual process of ripping SDL out and replacing the functionality that it gave us with calls to the native iPhone OS APIs. SDL had served us well on the iPhone, and we actually ship with SDL on the desktop versions. However, because we wanted to do some things that required source hacks on the SDL side, and we wanted to shed some of the overhead that SDL incurred, we decided to phase it out. In general we also wanted more control over the things that SDL abstracted away. The first thing we took direct control of ourselves was the input handling. One interesting thing that we found with the multi-touch handling was that the tapCount of a particular UITouch object would not increment if you had a separate UITouch down at the same time. Because two of our most basic functions were based on double tapping (double-tap on the left side to dash, double-tap on the right side to shoot), that meant that we could not shoot if we were already dashing, and conversely we could not dash if we were shooting. We didn’t know if this behavior was intentional or not, but it is critical to gameplay that we have the ability to do both. The fix was simple enough: we tracked the time since we last saw a particular UITouch come down, and used either that time measurement or a tapCount of 2 to initiate a dash or an attack. Fortunately, this behavior was changed in iPhone OS 3.0 to increment the tapCount as we originally expected.
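The double-tap workaround amounts to accepting either signal. The Python sketch below shows that logic under a few assumptions: the 0.3 second window, tracking touch-down times per screen side, and the `DoubleTapDetector` name are all invented for the example. Only the idea of falling back on elapsed time when tapCount fails to reach 2 comes from the fix described above.

```python
DOUBLE_TAP_WINDOW = 0.3  # seconds; an assumed threshold


class DoubleTapDetector:
    """Fires on a reported tapCount of 2 or on two quick touch-downs."""

    ACTIONS = {"left": "dash", "right": "shoot"}

    def __init__(self, window=DOUBLE_TAP_WINDOW):
        self.window = window
        self.last_down = {}  # side -> time of the previous touch-down

    def touch_down(self, side, timestamp, tap_count):
        previous = self.last_down.get(side)
        self.last_down[side] = timestamp
        # Fallback for when the OS does not increment tapCount because
        # another finger is already on the screen.
        quick = previous is not None and timestamp - previous <= self.window
        if tap_count >= 2 or quick:
            return self.ACTIONS[side]
        return None


detector = DoubleTapDetector()
events = [
    ("right", 0.00, 1),  # first tap on the right
    ("right", 0.15, 2),  # OS reports a double tap: shoot
    ("left", 1.00, 1),   # first tap on the left while still shooting
    ("left", 1.20, 1),   # tapCount stuck at 1, but the timing says dash
]
results = [detector.touch_down(*event) for event in events]
```

With both checks in place, the same code behaves correctly whether or not the OS increments tapCount during simultaneous touches.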